TECHNOLOGIST, RESEARCHER, LEADER

ATLAS VERNIER

atlas@vt.edu

Photo by Benjamin Gozzi.

I am Atlas Vernier, a student, researcher, and leader at Virginia Tech. I discovered a love for engineering during my first internship at NASA Langley in 2017, where I worked on furthering our technological capabilities in the engineering workflow and on the space frontier. Ultimately, I aim to lead an international, interdisciplinary team focusing on in-space and on-Earth technological capabilities, from autonomous collaborative robotics to augmented and virtual reality.

I am currently a graduate student at Virginia Tech (Blacksburg, VA, USA) pursuing my master’s degree in Industrial & Systems Engineering, concentrating on Human Factors and Human-Computer Interactions. I completed dual undergraduate degrees in Industrial & Systems Engineering (ISE) and French. The ISE course load is a unique opportunity to study systems and processes, which provides a strong foundation for entering engineering administration.

During my time at NASA Langley Research Center, I spoke with various directorate leaders about communicating with the Centre national d’études spatiales and the European Space Agency. As an engineer and a CAD technician, I understand the acute importance of accurate communication. More importantly, it is critical to recognize and respect cultural differences in order to strengthen international relationships.

Having found a passion for the French language in high school, I continued my learning at Virginia Tech. These courses allowed me to develop fluency in speaking, reading, and writing. I have also taken foundational Russian courses to broaden my communication skills.

My dream job after graduation is to serve as a liaison between NASA and our international counterparts for global technological development.

Concentration: Human Factors Engineering & Ergonomics

Affective Design and Computing

Socio-Technical Systems: Principles, Concepts, and Design

System Dynamics Modeling of Socio-Technical Systems

Social Computing & Computer-Supported Cooperation

Decision Analysis for Engineers

Mission Engineering I

Visual Display Systems

Cognitive Work & Task Analysis

Computer Music & Multimedia (Music Technology)

Human Factors Research Design

Human Information Processing

Human Factors System Design

Human Physical Capabilities

Special Study: Introduction to Immersive Multimedia

Special Study: Engineering Communication

Global Issues of Industrial Management

Theory of Organization

Project Management and Systems Design I & II

Statistical Quality Control

Risk and Hazard Control

Production Planning and Inventory Control

Human Factors Ergonomics Engineering

Technical Communications for Engineers

Advanced Topics in Business French

French for Oral Proficiency

Advanced Composition and Style

Grammar, Composition, and Conversation I

Grammar, Composition, and Conversation II

French Life, Literature, and Language

Introduction to French Literature

Introduction to Francophone Studies

Medieval-Renaissance Culture

French Culture from Baroque to Revolution

IDPro is a hands-on, project-centered research course that groups students according to skill and research interest into intentionally interdisciplinary teams. These student teams scope their project goals and drive their own progress while communicating with clients and mentors from industry and academia, with the goal of encouraging students to grow beyond their disciplines personally and professionally.

Founded by Dr. Lisa McNair and me in Fall 2023, IDPro has grown from a group of 34 students to include three GTAs, three teaching faculty, and over 180 students (as of Spring 2026). I’ve had the opportunity to develop the educational structure and workflows that help students succeed, as well as to mentor them so that their growth extends beyond the classroom.

Engineering Communications is a graduate-level course designed to develop skills in technical writing, presentations, and poster design by providing specific, tailored feedback based on weekly submissions. As the grader for the course, I am responsible for showcasing excellence in technical and interpersonal communication through continual examples, daily communications, and course expectations.

With the support of Dr. Kathleen Carper, I guest lectured to four sections of undergraduate students on the importance of abstracts and the process of writing one.

The Institute for Creativity, Arts, and Technology (ICAT) is a transdisciplinary group of artists, designers, and technologists from across the university advancing research, innovation, and education.

I am proud to serve as a technologist, using my skills in a variety of areas to support ICAT projects and collaborate with other on-campus organizations such as VCOM and ARIES. As a researcher, I support the creation of technological experiences using motion capture and immersive audio. I also explore new and emerging modalities of data collection, as well as the development of workflows for human research. I will also have the chance to work alongside the Center for Educational Networks and Impacts to develop transdisciplinary educational experiences for students at Virginia Tech!

Open the Gates Gaming is an organization that seeks to create an inclusive and accessible environment for storytelling and human connection.

Our work is two-fold. First, our team designs and tests systems of open-access tools that allow disabled and neurodivergent players to engage with tabletop roleplaying games without altering the rules of gameplay. Play—and connection—is a human right, and these tools lay a foundation to allow everyone a seat at the table.

The other half of our work centers on stories. We write adventures that allow players to engage with complex histories, wrestle with exclusionary narratives, and craft their own hero’s journey. This empowers players to tell the stories that are important to them, and it also allows them to engage with difficult topics face-to-face.

Space Jam was a project developed during the two-week winter semester of 2023. Users are immersed in a virtual audio environment. While holding a glowing orb (a planet of their very own), they can traverse the solar system to hear the sounds of each planet. Each planet has its own dedicated theme, including key, instrumentation, and arpeggiation. These themes are generated using live data; no matter how long you stay in a planet’s orbit, you will always hear new music!

Interactive Audio Environments

Space Jam utilized a multitude of systems available in the Cube, including the cyclorama, spatialized audio system, motion capture system, and floor projection. These systems were linked together to create a cohesive and immersive experience.

Special thanks to David Franusich, Sooruj Bhatia, Xiang Li, Brandon Hale, Tanner Upthegrove, and ICAT for enabling this work.

Applied Research in Immersive Experiences and Simulations (ARIES) is a team of students and faculty working across the university to create accessible tools and workflows using a variety of technologies. We aim to push the boundaries of what is possible, developing both hardware and software solutions to fulfill the needs of our research partners. Students are encouraged to learn new programs and bring their ideas to life using virtual and augmented reality, while also applying the lessons we learn to content creation and educational programs. Our projects span dozens of university departments, including creative technologies, computer science, performing arts, history, French, and archaeology.

Motion Capture

Our motion capture system lives on the fourth floor of Newman Library. We utilize an OptiTrack motion capture system, which is mounted on trusses inside 4030.

For human capture, markers are placed at specific points on the body to indicate joint and extremity movement. The marker placement depends on the type of capture needed, and the OptiTrack system allows for sub-millimeter precision. This movement data can then be sent to Unreal for dynamic rendering in real time, as pictured above. This will ultimately be used to create immersive experiences with full-body movement. There is also a use case for simulation and training: for example, a soccer player in a VR headset and motion capture suit could be presented with potential game-day scenarios.

Vauquois

The Vauquois project is centered on the village of Vauquois, the remains of which lie in rural France. The village was destroyed during WWI due to its strategic location; dozens of tunnels and mines still remain under the village. The destruction of Vauquois was well documented because of its role in the war; however, little record remains of life there before its destruction. ARIES focuses on daily life in the village and the creation of historically accurate buildings.

The digital restoration of Vauquois is built in Unreal. Larger buildings, such as the church and school, have been modeled by hand, while many of the homes and other buildings are dynamically generated using custom generation code written for the project. The tunnels underneath the village have been documented using point cloud and photogrammetry technologies.

Construction Safety

The construction safety project focused on visualizing the flow of traffic and changes to the road during an ongoing construction project. This included testing visibility in variable weather and lighting.

This image was rendered in Twinmotion. Because of the iteration speed of this project, I learned how to use the software very rapidly, jumping into creating complex renders and simulations within a week.

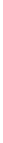

The Field and Space Experimental Robotics Laboratory (FASER Lab) is a research group in Mechanical Engineering that aims to develop advanced methods for robotic collaboration and construction on the ground and in space. To create such novel approaches to robotic issues, we conduct research centered on variety, distribution, estimation, sequencing, autonomy, and serviceability.

For more info, see the FASER Lab research page here.

Lightweight Surface Manipulation System

The LSMS: a 14-foot tendon-actuated manipulator intended to make the large-scale motions in collaborative robotic assemblies.

Mobile Assembly Robotic Collaborator

One of two MARC robots used for our error detection and correction research, using truss assembly as an initial use-case.

RASC-AL

The FASER Lab competed in the RASC-AL Moon to Mars Ice and Prospecting Challenge, in collaboration with two senior design teams. The Overburden Layer Ice to Vapor Extraction Robot (OLIVER) was one of ten finalists at NASA Langley Research Center in 2019.

As of January 2021, I have completed four internships at NASA Langley Research Center in the Office of the Chief Information Officer. Over the course of these internships, my team and I developed unique solutions in augmented and virtual reality (AR/VR) that emphasize the strengths of the virtual toolset while interacting with a physical environment.

Shackleton Crater is an ice-bearing crater at the lunar south pole and is currently one prospective landing location. Due to the low light angle, visibility is difficult, with astronauts facing either blinding sunlight or complete darkness.

To address this, we designed the lunar navigation HUD, pictured below. It features an overlaid navigation display with a mini-map and compass. Around the edges, it shows relevant suit and exterior metadata, with optional overlays including the optimal path, potential dangers, navigational waypoints, and the destination.

We were able to implement a basic version of the HUD in VR, and only a basic overlay in AR.

ARVRIS began as the Multiuser Modal Manipulation Tool in late 2017. The first iteration was completed by spring of 2018, including multi-user functionality, push-button model movement, explosion view, individual component selection, and a basic menu.

The transition to ARVRIS, the Augmented Reality and Virtual Reality Integrated Systems (pictured below), occurred in summer 2019 with the addition of the Engineering Design Studio team. This version added dynamic model movement for fine detail control, control labels in the virtual environment, improved stability and framerates, multiple model support, and 3D backgrounds to give the user a sense of orientation. We also implemented advanced UI and component selection, along with in-scene control mapping and model importation, so that models no longer had to be imported from outside the program.

ARVRIS also includes a set of implemented features, some of which were inherited from the Multiuser Modal Manipulation Tool with optimization for the ARVRIS system. These include:

Object manipulation (rotate, scale, move, push, pull)

Scene teleportation (moving around the scene independent from model movement)

Laser pointers (allowing for more precise object indication, especially in a multiuser situation)

Immersive audio (ambient music and audio cues)

Reassemble/Undo (reassembling from the exploded view)

Satellite Orbit

The satellite orbit demonstration addresses a need on behalf of the GPX SmallSat launch team. It features realistic GPS satellite orbits, which are dynamically generated mathematical ellipses. It visualizes the distance between existing GPS satellites and the proposed GPX satellite, making distance relationships easier to understand in VR with a dynamic time scale.
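As a rough illustration of how an orbit can be generated as a mathematical ellipse, the sketch below samples points from the polar equation of an ellipse with one focus at the origin. It is not the code used in the demonstration. The function names, the 2D simplification, and the parameters of the second orbit are assumptions made for illustration; the GPS semi-major axis is an approximate published figure.

```python
import math

# Approximate semi-major axis of a GPS orbit, in kilometers.
GPS_SEMI_MAJOR_KM = 26_560.0


def ellipse_orbit_points(semi_major_km, eccentricity, num_points=360):
    """Sample (x, y) points on an ellipse with one focus at the origin.

    Uses the polar form r = a(1 - e^2) / (1 + e*cos(theta)), where theta
    is the angle measured from the closest-approach point (periapsis).
    """
    if not 0.0 <= eccentricity < 1.0:
        raise ValueError("eccentricity must be in [0, 1) for a closed orbit")
    semi_latus = semi_major_km * (1.0 - eccentricity ** 2)
    points = []
    for i in range(num_points):
        theta = 2.0 * math.pi * i / num_points
        radius = semi_latus / (1.0 + eccentricity * math.cos(theta))
        points.append((radius * math.cos(theta), radius * math.sin(theta)))
    return points


def separation_km(p, q):
    """Straight-line distance between two sampled orbit positions."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


# A circular GPS-like orbit and a hypothetical second orbit (values assumed).
gps_track = ellipse_orbit_points(GPS_SEMI_MAJOR_KM, 0.0)
other_track = ellipse_orbit_points(7_000.0, 0.1)
print(separation_km(gps_track[0], other_track[0]))
```

A real visualization would also need orbital inclination and a time-based position along the orbit; this sketch only covers the ellipse geometry and the distance readout.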

5D Scatterplot

This demonstration was intended to show what is possible for data visualization in mixed reality. We used public datasets for the proof-of-concept; the scatterplot pictured below uses a well-known dataset of flowers. We are able to graph in five dimensions: the standard X, Y, and Z axes, as well as the color and diameter of the points themselves.
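To make the five-dimension mapping concrete, here is a minimal sketch of how one table row could become one plotted point. It assumes an Iris-style flower table with four measurements and a species label; the source does not name the dataset, so the column order, sample rows, color choices, and size ranges below are all illustrative assumptions rather than the demonstration's actual settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point5D:
    x: float         # first measurement
    y: float         # second measurement
    z: float         # third measurement
    diameter: float  # fourth measurement, rescaled to a marker size
    color: str       # category label mapped to a color


# Assumed color assignment for an Iris-style species column.
SPECIES_COLORS = {"setosa": "red", "versicolor": "green", "virginica": "blue"}


def scale(value, lo, hi, out_lo, out_hi):
    """Linearly map value from [lo, hi] to [out_lo, out_hi], clamping the ends."""
    t = (value - lo) / (hi - lo)
    t = min(1.0, max(0.0, t))
    return out_lo * (1.0 - t) + out_hi * t


def to_point(row, width_range=(0.1, 2.5), diameter_range=(0.01, 0.05)):
    """Convert (sepal_len, sepal_wid, petal_len, petal_wid, species) to a point."""
    sepal_len, sepal_wid, petal_len, petal_wid, species = row
    return Point5D(
        x=sepal_len,
        y=sepal_wid,
        z=petal_len,
        diameter=scale(petal_wid, *width_range, *diameter_range),
        color=SPECIES_COLORS[species],
    )


# Illustrative sample rows only (assumed format, not the project's data file).
SAMPLE_ROWS = [
    (5.1, 3.5, 1.4, 0.2, "setosa"),
    (7.0, 3.2, 4.7, 1.4, "versicolor"),
    (6.3, 3.3, 6.0, 2.5, "virginica"),
]

points = [to_point(row) for row in SAMPLE_ROWS]
for point in points:
    print(point)
```

Each resulting point carries everything a renderer needs: a 3D position plus a size and a color, which is what lets a three-axis plot show five variables at once.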

The VR CAD Repository is an organized database of 3D models sourced from a variety of branches across NASA Langley, including the Science Directorate, the Engineering Directorate, and the Office of the Chief Information Officer. Prior to my work on the repository, no comparable center-wide system existed.

These models are accurate and to-scale, as well as SBU and ITAR compliant. (Although this may include some degree of sanitization, they maintain a high level of accuracy.) To maintain high security, the repository features multiple permission groups, each with its own level of model access. It is currently accessible by anyone at Langley through a NAMS request for a Windows Drive Share, with plans to transition to SharePoint Online.

I am a fifth-year member of the Marching Virginians, the Spirit of Tech. During the fall semester, we perform at every home game: a new show for each game. For the musicians on-field, this means learning new drill and music almost every week; we rehearse daily to ensure that we are able to meet and exceed the high standards expected from the Marching Virginians. We also travel with the football team to bowl games, including the 2018 Military Bowl, the 2019 Belk Bowl, and the 2021 Pinstripe Bowl.

I will be a rank captain for the 2022-23 season and served in the same position during the 2021-22 season. As rank captain, I am responsible for overseeing my rank, a group of approximately 9 students. Due to the rapid speed at which we learn, I ensure that each student knows the new material and support them throughout the season. I also help new members and provide additional information, resources, and reminders for rehearsal and game day.

I am proud to work as one of the community translators for a recorded international broadcast on the CR Translate (CRT) team. When translating, the translator is provided with an English transcription and the associated timeslot for the caption, as well as the number of characters available for the translation. This ensures that the caption stays in sync with the audio and can still be read in a timely fashion. I translate from English to French, ensuring that everything can be understood by an international audience. This includes the body of the text, verb tenses, context, and jokes that may not translate directly.

Although I work solely with the French team, CRT translates into 32 languages total, including German, Spanish, Russian, Dutch, and Arabic.

I served as the 2021-22 Director of Corporate Relations for the Virginia Tech chapter of the Institute of Industrial and Systems Engineers (IISE). The IISE is the world’s largest professional society dedicated to supporting individuals pursuing careers and studies in industrial engineering. We provide leadership for education, training, research, and development of industrial engineering.

As the Director of Corporate Relations, I was responsible for overseeing all communications with our corporate sponsors. I developed and maintained long-term relationships with each company and worked with their representatives to organize multiple events for them to engage with our students.

In spring 2019, I was accepted to the Citizen Science Leadership Team (CSLT) of the Orion Sciences LLC. The Orion Sciences LLC allows first-year students to meet other open-minded students and find support in an academic, professional, and social community.

Members of the CSLT design and oversee projects for students to give them an idea of what working in the scientific and engineering industry will look like. In fall of 2019 and spring of 2020, I led the Scieneering Rocket Project (SRP), which was a collaboration between the College of Science and College of Engineering, providing students with the opportunity to work in a collaborative environment with peers outside of their major, as they likely will after graduation.

CircuiTree 1599 is a competitive robotics team that builds a robot within competition parameters over a six-week period. Once the build season is complete, the robot and team attend regional and international competitions where both are judged on performance, hardware, software, electronics, business, and professionalism. As the Vice President and Administrator, over the course of the 2018 season, I raised over $300,000 in funds, organized team and sponsor functions, and wrote a business plan, which allowed judges to understand our process. This season also held our team’s most prominent successes. For the first time since our rookie year of 2005, CircuiTree travelled to Detroit, Michigan to participate in the World Championship.

During the 2017 season, I was the only person capable of designing high-level CAD models; as such, I was named the CAD team captain. In order to prepare for future years, I trained two novice members. By the end of the season, we assembled over 1,000 parts to create a model. As the season progressed, we updated the model according to changes made on the physical robot. To support the team in competition, we also created blueprints for critical pieces, animations, and stress analyses.